28 January 2026

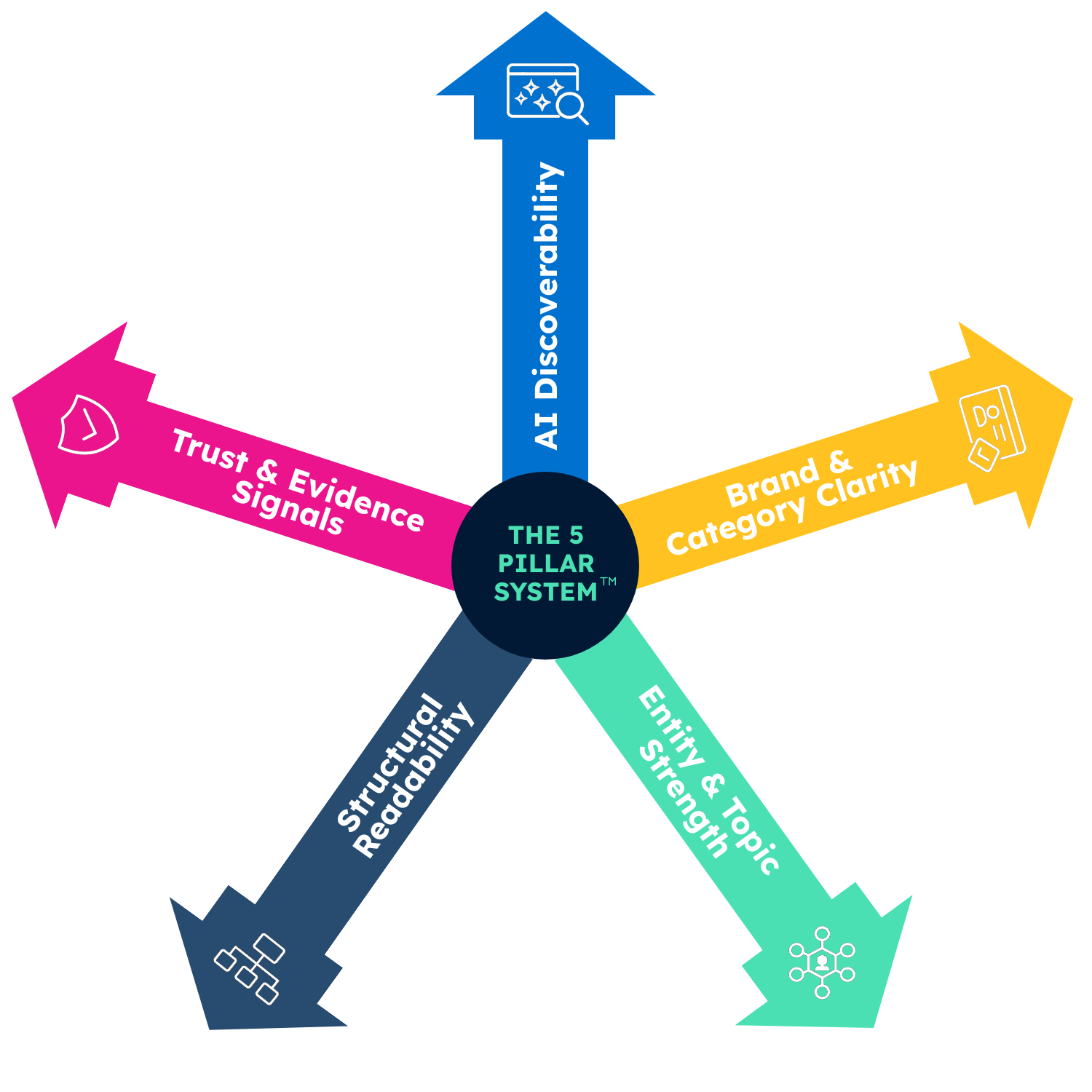

Law Firm Marketing Strategies in the AI Search Era: The Complete Guide for 2026

Law firm marketers face a problem that didn't exist three years ago. Clients are asking ChatGPT and Perplexity for legal recommendations, and those AI assistants return one trusted answer rather than a list of ten options. If AI doesn't understand your firm clearly enough to recommend it, you lose instructions to competitors who invested in visibility earlier.

Law firm marketing now spans two worlds: traditional search engines and AI assistants like ChatGPT, Perplexity, and Gemini. The firms winning instructions in 2026 are those AI can find, understand, and confidently recommend. This guide covers everything from foundational website work and SEO to Generative Engine Optimisation (G.E.O), so your firm stays visible wherever clients search.

Key Takeaways

- Law firm marketers drive client acquisition: They combine SEO, PPC, content, and reputation management, all tailored to legal compliance and client intake
- AI search changes the game: Clients now ask AI assistants for recommendations, and those assistants return one trusted answer rather than ten options
- Traditional SEO is necessary but not sufficient: Your firm also needs to be understandable and recommendable by large language models
- Trust signals determine AI recommendations: Accreditations, reviews, directory consistency, and entity clarity influence which firms AI suggests
- Measurable tactics exist: From website structure to AI visibility audits, every approach here is actionable and trackable

How AI Search Is Changing the Way Clients Find Law Firms

Law firm marketers are specialists, either in-house or agency-based, who drive client acquisition through tailored digital work.
They combine SEO, PPC, content creation, social media, and reputation management, all adapted to legal compliance and client intake workflows. Yet something fundamental has shifted. AI assistants no longer return a list of websites when someone asks "who is the best employment lawyer near me." Instead, they return one main answer: the firm they believe is most credible. 93% of AI searches end without a website click, making it critical to appear in these AI-generated answers.

This changes what marketing for law firms actually means. Your firm now needs to be findable (AI can locate information about you), understandable (AI correctly interprets what you do and who you serve), and recommendable (AI trusts you enough to suggest you over competitors). If any of these three elements is missing, you may not appear in AI-driven recommendations at all.

What Is Law Firm Marketing

Law firm marketing is the practice of attracting, engaging, and converting prospective clients through coordinated activities across multiple channels. It covers everything from networking at industry events to running targeted digital campaigns.

Traditional Marketing for Law Firms

Offline methods still play a role: referrals, sponsorships, speaking engagements, and print advertising. These build relationships and reinforce reputation. However, they no longer work in isolation. Clients who hear about you offline typically research you online before making contact.
Digital Marketing for Law Firms

Web marketing for law firms includes several interconnected disciplines:

- SEO (Search Engine Optimisation): Making your website rank higher in Google results for relevant searches
- PPC (Pay-Per-Click): Paid advertising where you pay when someone clicks your ad
- Content marketing: Publishing articles, guides, and videos that demonstrate expertise
- Social media: Building presence on LinkedIn, Twitter/X, and Facebook
- Email marketing: Nurturing relationships with prospects and past clients

Each channel serves a different purpose, though they work best when coordinated.

Generative Engine Optimisation for Law Firms

G.E.O is the newest layer of lawyer marketing. It sits on top of traditional SEO and focuses specifically on making your firm discoverable and recommendable by AI search platforms. Where SEO asks "how do we rank in Google?", G.E.O asks "how do we get recommended by ChatGPT?" The disciplines overlap but are not identical. A firm can rank well in traditional search yet be invisible or misrepresented in AI answers.

Why Every Law Firm Needs a Marketing Strategy

"Surely good work speaks for itself?" It does, but only to people who already know about you. The challenge is reaching those who don't.

Referrals Alone No Longer Fill the Pipeline

Referrals remain valuable, often producing the highest-quality instructions. Yet relying solely on referrals creates vulnerability. When referral sources retire, move, or simply forget to mention you, the pipeline dries up without warning.

Clients Research Law Firms Before Making Contact

Even referred clients research firms independently before calling. They read reviews, compare websites, and check credentials. If your online presence is weak or confusing, you may lose the instruction before you know it existed.

AI Assistants Now Influence Which Firms Get Recommended

Here's the shift that matters most.
When clients ask AI assistants for legal guidance, only firms that AI understands and trusts get recommended. If someone asks Perplexity "who handles commercial lease disputes in Manchester?", the AI doesn't show ten options. It names one or two firms it believes are credible. If your firm isn't clearly positioned for that query, you won't be mentioned.

How Much Should a Law Firm Spend on Marketing

Budgets vary based on firm size, practice areas, competitive landscape, and growth ambitions. There's no universal percentage that works for everyone.

Law Firm Marketing Budget Benchmarks

| Firm Size | Budget Approach |
| --- | --- |
| Solo practitioners | Start with time-intensive work like content and local SEO |
| Small firms | Balance between SEO, local listings, and selective paid advertising |
| Mid-size and large firms | Comprehensive approach across multiple channels including AI visibility |

The key is proportionality. A firm seeking aggressive growth invests more than one focused on maintaining current client levels. High-growth firms invest 16.5% of revenue in marketing, compared to just 2% for average firms.

Where to Allocate Your Marketing Budget

Priority areas typically include:

- Your website and SEO foundation (your primary marketing asset)
- Local visibility through Google Business Profile and legal directories
- Content and thought leadership that demonstrates expertise
- AI search visibility, an emerging priority for forward-thinking firms

Law Firm Marketing Strategies for SEO and AI Search

These ten approaches work for both traditional search engines and AI assistants. Each builds on the others, so consider them as a system rather than isolated tactics.

1. Build a Law Firm Website That AI Can Understand

AI reads websites as flat documents, scanning from top to bottom. Clear hierarchy matters enormously. If your navigation is confusing or your page structure is inconsistent, AI struggles to interpret what your firm actually does.
Focus on clean navigation, logical page structure, fast loading times, and mobile-friendly design. Bad structure equals bad AI interpretation.

2. Optimise for Search Engines and AI Assistants

On-page SEO basics, including title tags, meta descriptions, and header tags, help both Google and AI understand your content. These elements signal what each page is about and how it relates to your firm's broader expertise. The same clarity that helps you rank in traditional search also helps AI correctly categorise your services.

3. Create Practice Area Pages with Entity Clarity

Entity clarity means AI understands exactly what your firm does, who it serves, and where. Each practice area deserves its own page with clear definitions of services, ideal clients, and jurisdictions covered. Vague or combined pages confuse AI. A page titled "Our Services" that lists everything from conveyancing to criminal defence gives AI no clear signal about your specialisms.

4. Claim and Optimise Your Google Business Profile

Your Google Business Profile feeds into AI knowledge systems. Accurate NAP (Name, Address, Phone), appropriate categories, detailed services, and regular updates all strengthen your visibility. This is often the fastest win available, because many firms have incomplete or outdated profiles.

5. Publish Content That Demonstrates Legal Expertise

Content marketing builds topical authority. Blog posts, guides, FAQs, and legal updates that answer real client questions signal to both search engines and AI that your firm has genuine expertise. The key is relevance. Content that addresses questions your ideal clients actually ask performs better than generic legal commentary.

6. Build Trust Signals Through Reviews and Accreditations

Trust signals are external indicators of credibility that AI uses to judge reliability:

- Client reviews: Google reviews, Trustpilot, testimonials
- Legal rankings: Legal 500, Chambers
- Professional memberships: Law Society, SRA registration
- Quality marks: Lexcel, Conveyancing Quality Scheme

AI cross-references these signals when deciding which firms to recommend. A firm with strong, consistent trust signals feels safer to AI than one with claims but no verification.

7. Align Directory Listings for NAP Consistency

NAP consistency means your Name, Address, and Phone number match exactly across every directory and profile. Inconsistencies, even small ones like "Street" versus "St.", create uncertainty for AI. Key legal directories include Chambers, Legal 500, Avvo, Justia, and FindLaw. Each listing reinforces or undermines your firm's credibility.

8. Use Structured Data to Help AI Interpret Your Firm

Structured data (also called schema markup) is code that tells search engines and AI exactly what your content means. It's like adding labels to your website that machines can read. Relevant schema.org types include LocalBusiness, Attorney, LegalService, and FAQPage. Implementation typically requires developer support but delivers lasting benefits.

9. Develop a Lawyer Marketing Plan for Social Media

LinkedIn is the primary platform for legal professionals. It's where referrers, potential clients, and peers see your thought leadership. Twitter/X and Facebook serve supporting roles depending on your practice areas. Focus on demonstrating expertise rather than selling. Share insights, comment on legal developments, and engage with your professional community.

10. Run Targeted PPC Campaigns for Immediate Visibility

Pay-per-click advertising provides immediate visibility while SEO and AI visibility build over time. Legal keywords are competitive and expensive, so targeting matters enormously.
Focus on high-intent searches where someone is actively seeking legal help, not just researching general legal topics.

How to Make Your Law Firm Visible to AI Assistants

Traditional SEO is necessary but not sufficient for AI visibility. This section covers what else your firm needs.

How AI Models Decide Which Law Firms to Recommend

AI assistants surface firms they find credible based on clarity, entity signals, and trust evidence. When someone asks "who is the best family lawyer in Manchester?", AI looks for the firm it can understand and trust most. This isn't about who has the most content or the biggest budget. It's about who AI can confidently recommend without risk of being wrong.

What Is an AI Visibility Audit for Law Firms

An AI Visibility Audit reviews how AI tools, including ChatGPT, Perplexity, Gemini, and Claude, currently describe and recommend your firm. It reveals gaps between how you see yourself and how AI sees you. Many firms discover that AI misunderstands their practice areas, confuses them with competitors, or simply doesn't mention them at all. The audit identifies exactly what to fix.

The Five Pillars of AI Search Visibility

The 5 Pillar System™ provides a framework for understanding AI visibility:

- AI Discoverability: Whether and how AI surfaces your firm
- Brand and Category Clarity: How clearly AI classifies what you do
- Entity and Topic Strength: Depth of your firm's expertise signals
- Structural Readability: How well AI can parse your website
- Trust and Evidence Signals: External credibility indicators AI uses

Weakness in any pillar undermines overall visibility. Strength across all five creates compounding benefits.

How to Measure Law Firm Marketing Success

What gets measured gets improved. Both traditional and AI-specific metrics matter.
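As a rough illustration of the traditional ratios this section goes on to list (conversion rate, cost per lead, and return on investment), the short Python sketch below works through them with made-up figures. The function names and every number are hypothetical, and the ROI formula used here, (revenue minus spend) divided by spend, is one common convention assumed for the example rather than a definition taken from this guide.

```python
def conversion_rate(enquiries: int, visitors: int) -> float:
    """Share of website visitors who went on to enquire."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return enquiries / visitors


def cost_per_lead(spend: float, enquiries: int) -> float:
    """Marketing spend divided by the number of enquiries it produced."""
    if enquiries <= 0:
        raise ValueError("enquiries must be positive")
    return spend / enquiries


def return_on_investment(revenue: float, spend: float) -> float:
    """Assumed convention: net return as a fraction of spend."""
    if spend <= 0:
        raise ValueError("spend must be positive")
    return (revenue - spend) / spend


# Hypothetical monthly figures for an imaginary firm.
visitors = 2000
enquiries = 50
spend = 5000.0
revenue = 20000.0

print(f"Conversion rate: {conversion_rate(enquiries, visitors):.1%}")
print(f"Cost per lead: {cost_per_lead(spend, enquiries):.2f}")
print(f"ROI: {return_on_investment(revenue, spend):.0%}")
```

With these invented inputs the ratios come to a 2.5% conversion rate, a cost of 100.00 per lead, and a 300% return. Tracking the same three ratios per channel (SEO, PPC, directories) makes it easier to see where budget is working.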
Traditional Metrics for Attorney Marketing

- Website traffic: Visitors to your site
- Conversion rate: Percentage of visitors who enquire
- Cost per lead: What you spend to acquire each enquiry
- Return on investment: Revenue generated versus marketing spend

AI Visibility Metrics to Track

- AI mentions: How often AI assistants reference your firm
- AI description accuracy: Whether AI describes your firm correctly
- Recommendation frequency: Whether you appear in AI-generated lists
- Competitor positioning: Where you rank relative to competitors in AI answers

Should You Hire a Law Firm Marketing Agency or Go In-House

The right approach depends on your firm's resources, expertise, and growth goals.

When to Handle Marketing for Your Law Firm In-House

In-house works well when you have internal marketing talent and time to dedicate. Advantages include cost control and deep brand familiarity. The challenge is keeping skills current as the landscape evolves.

When to Hire a Legal Marketing Agency

Agencies suit firms wanting comprehensive digital marketing management without building internal teams. Look for agencies with genuine legal sector experience. Generic marketing agencies often miss compliance nuances.

When to Work with an AI Search Specialist

AI search specialists suit firms that have traditional SEO in place but remain invisible to AI assistants. This is targeted expertise in an emerging discipline, focused specifically on how large language models interpret and recommend businesses.

Why Your Firm Needs to Be Found and Recommended by AI Search

The shift from "list of ten options" to "one recommended answer" is already happening. Clients asking AI assistants for legal guidance receive a single firm name, not a comparison table. If your firm isn't understood and trusted by AI, you won't be that recommendation. The instructions will go elsewhere, often to competitors who invested in AI visibility earlier.
Book Your Free A.I Search Visibility Call to understand how AI currently sees your firm and what to fix first.

FAQs About Marketing for Law Firms

What is the difference between SEO and G.E.O for law firms?

SEO focuses on ranking in traditional search engine results pages. G.E.O (Generative Engine Optimisation) focuses on making your firm discoverable and recommendable by AI assistants like ChatGPT and Perplexity. Both are necessary. SEO builds the foundation, and G.E.O ensures AI can interpret and trust what you've built.

How do I check if AI assistants recommend my law firm?

Ask AI tools directly. Type queries like "best [practice area] lawyer in [location]" into ChatGPT, Perplexity, and Gemini and see if your firm appears. For a structured analysis, request a Free AI Snapshot Audit.

What trust signals do AI assistants use when recommending law firms?

AI assistants look for accreditations (Legal 500, Chambers, Law Society), consistent directory listings, client reviews, media mentions, and clear practice area definitions. These external signals help AI verify that your firm is what it claims to be.

Can small law firms compete with large firms in AI search visibility?

Yes. AI assistants prioritise clarity and relevance over firm size. A small firm with well-structured content and strong trust signals in a specific practice area can outperform larger generalist competitors who spread their signals too thin.

How long does law firm marketing take to produce results?

Traditional SEO typically takes several months to show meaningful ranking improvements. PPC delivers immediate traffic. AI visibility improvements can often be seen within weeks of implementing structural and trust signal changes, though building lasting authority takes longer.
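
To make the structured data described in strategy 8 more concrete, here is a minimal Python sketch that builds a schema.org LegalService description and wraps it as JSON-LD, the format commonly embedded in a page's HTML. The helper name `build_legal_service_jsonld` and every firm detail (name, URL, phone number, address, practice areas) are hypothetical placeholders, and the choice of properties is an assumption for illustration, not a complete or required set.

```python
import json


def build_legal_service_jsonld(name, url, phone, street, city,
                               postcode, country, practice_areas):
    """Return a schema.org LegalService description as a plain dict."""
    return {
        "@context": "https://schema.org",
        "@type": "LegalService",
        "name": name,
        "url": url,
        "telephone": phone,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "postalCode": postcode,
            "addressCountry": country,
        },
        "areaServed": city,
        # Practice areas expressed as free-text topics (an assumed choice).
        "knowsAbout": practice_areas,
    }


# Hypothetical firm details, for illustration only.
firm = build_legal_service_jsonld(
    name="Example Law LLP",
    url="https://www.example.com",
    phone="+44 161 496 0000",
    street="1 Example Street",
    city="Manchester",
    postcode="M1 1AA",
    country="GB",
    practice_areas=["Commercial lease disputes", "Employment law"],
)

# JSON-LD is conventionally placed inside a script element of this type.
jsonld_text = json.dumps(firm, indent=2)
script_tag = f'<script type="application/ld+json">\n{jsonld_text}\n</script>'
print(script_tag)
```

Generating the markup from a single record like this also helps with NAP consistency, since the same name, address, and phone values can be reused wherever the firm is listed.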